Dataset Nutrition Label

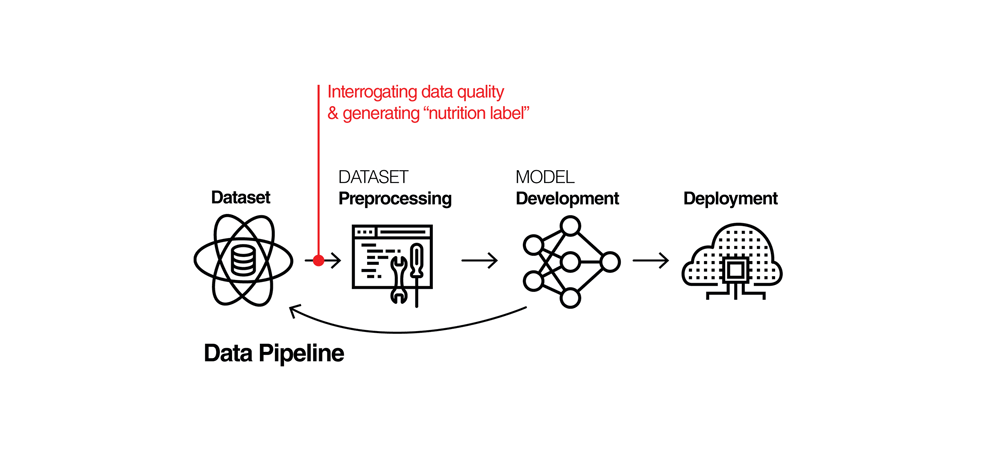

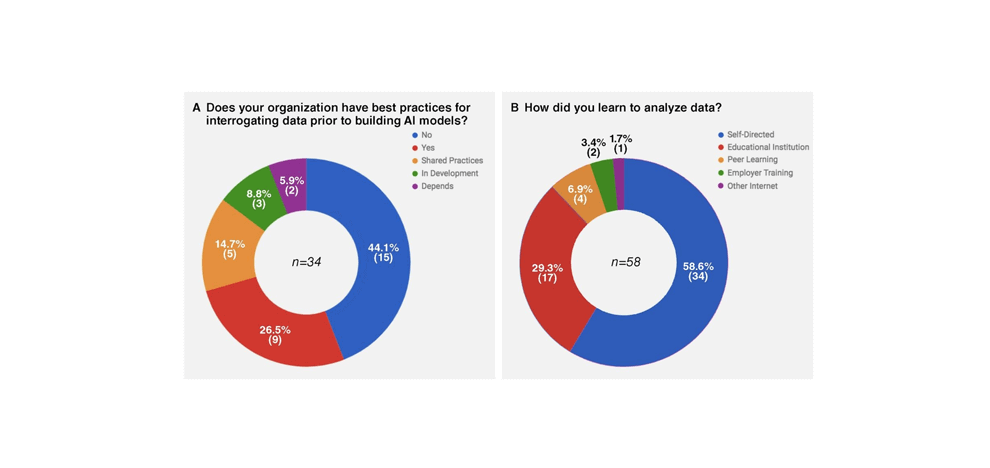

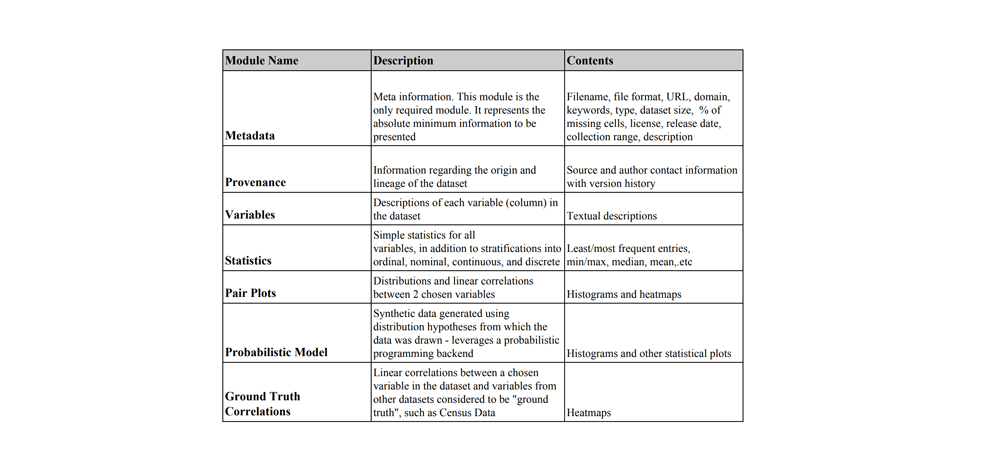

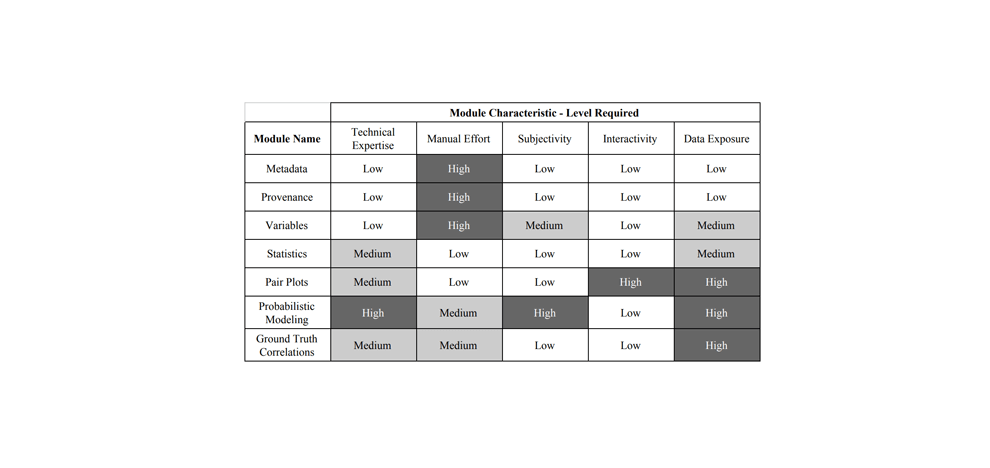

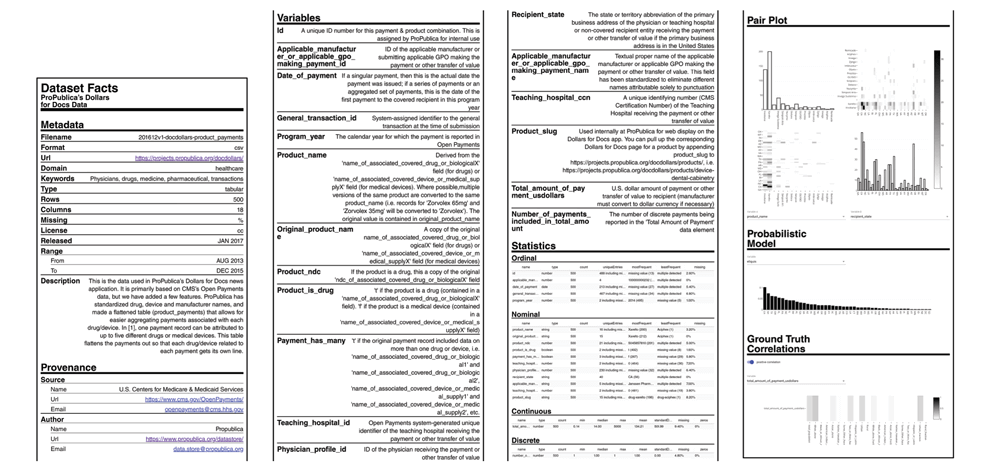

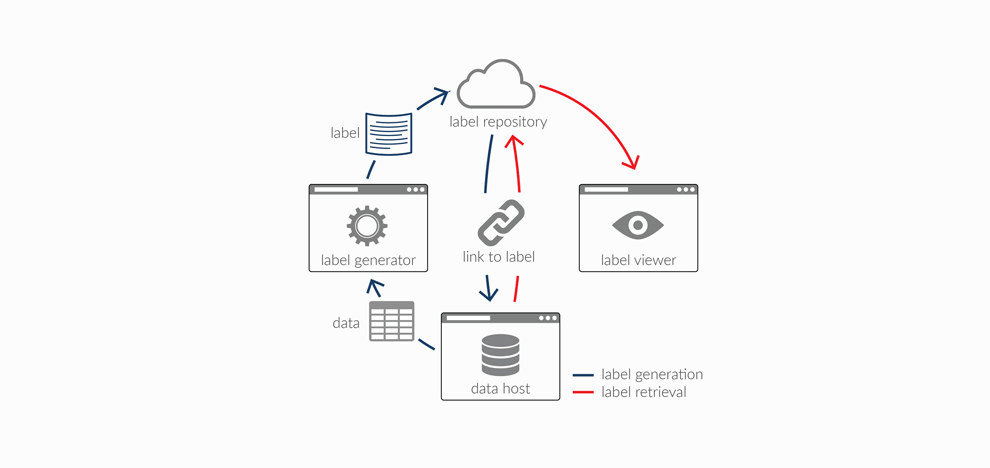

Artificial intelligence (AI) systems built on incomplete or biased data will often exhibit problematic outcomes. Current methods of data analysis, particularly before model development, are costly and not standardized. The Dataset Nutrition Label (the Label) is a diagnostic framework that lowers the barrier to standardized data analysis by providing a distilled yet comprehensive overview of dataset "ingredients" before AI model development. Building a Label that can be applied across domains and data types requires that the framework itself be flexible and adaptable; as such, the Label is composed of diverse qualitative and quantitative modules generated through multiple statistical and probabilistic modelling backends, but displayed in a standardized format. To demonstrate and advance this concept, we generated and published an open-source prototype with seven sample modules on the ProPublica Dollars for Docs dataset. The benefits of the Label are manifold. For data specialists, the Label will drive more robust data analysis practices, provide an efficient way to select the best dataset for their purposes, and increase the overall quality of AI models as a result of more robust training datasets and the ability to check for issues at the time of model development. For those building and publishing datasets, the Label creates an expectation of explanation, which will drive better data collection practices. We also explore the limitations of the Label, including the challenges of generalizing across diverse datasets and the risk of using "ground truth" data as a comparison dataset. We discuss ways to move forward given the limitations identified. Lastly, we lay out future directions for the Dataset Nutrition Label project, including research and public policy agendas to further advance consideration of the concept.
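To make the modular idea concrete, here is a minimal Python sketch of how independent qualitative and quantitative modules could feed one standardized output. It is an illustration only, not the prototype's code: the names `Module`, `provenance_module`, `missing_share`, and `build_label`, as well as the output fields, are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable

Row = dict[str, Any]


@dataclass
class Module:
    """One section of the label (hypothetical structure, not the prototype's)."""
    name: str
    kind: str  # "qualitative" or "quantitative"
    compute: Callable[[list[Row]], dict[str, Any]]


def provenance_module(description: str) -> Module:
    # Qualitative module: passes through text supplied by the dataset author.
    return Module("provenance", "qualitative", lambda rows: {"description": description})


def missing_share(rows: list[Row]) -> dict[str, float]:
    # Quantitative module: fraction of rows where each column is absent or None.
    columns = sorted({key for row in rows for key in row})
    return {c: sum(1 for r in rows if r.get(c) is None) / len(rows) for c in columns}


def build_label(rows: list[Row], modules: list[Module]) -> list[dict[str, Any]]:
    # Every module, whatever its backend, is reported in the same shape.
    return [{"module": m.name, "kind": m.kind, "content": m.compute(rows)} for m in modules]


rows = [{"a": 1, "b": None}, {"a": 2, "b": 3}]
label = build_label(
    rows,
    [
        provenance_module("Example description written by the publisher."),
        Module("missing_values", "quantitative", missing_share),
    ],
)
```

Each module computes its content independently, so a new analysis can be added without changing how the label is displayed.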

The manuscript can be found here, in addition to the project's homepage and prototype demo.